The clock is to an SoC what blood is to the human body. Just as blood flows to every part of the body and regulates metabolism, the clock reaches every sequential device and controls the digital events inside the SoC. Modern designers use many terms in relation to the clock, and the backend team monitors these carefully while building the Clock Tree. Let's have a look at them.

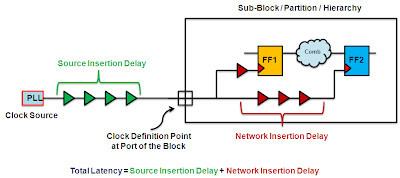

- Clock Latency: Clock Latency is the general term for the delay that the clock signal takes between any two points. It can be measured from the source (PLL) to the sink pin (clock pin) of the registers, or between any two intermediate points. Because it is a general term, you need to know the context before assuming what exactly someone means when they mention clock latency.

- Source Insertion Delay: This refers to the clock delay from the clock origin point (for example, the PLL or the IRC, Internal Reference Clock) to the clock definition point.

- Network Insertion Delay: This refers to the clock delay from the clock definition point to the sink pin of the registers.
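The following minimal Python sketch makes the three terms concrete by splitting one clock path at the clock definition point. The point names and delay values are hypothetical. Each delay is taken as the delay from the previous point on the path.

```python
# Each entry: (point_name, delay_ps from the previous point on the path).
# Point names and delay values are hypothetical, for illustration only.
ClockPath = list[tuple[str, float]]

def latency(path: ClockPath, start: str, end: str) -> float:
    """Clock latency between any two points on the same path (start before end)."""
    names = [name for name, _ in path]
    i, j = names.index(start), names.index(end)
    if i > j:
        raise ValueError("start must come before end on the clock path")
    # Sum the segment delays after `start` up to and including `end`.
    return sum(delay for _, delay in path[i + 1 : j + 1])

def insertion_delays(path: ClockPath, definition_point: str) -> tuple[float, float]:
    """Return (source insertion delay, network insertion delay)."""
    origin, sink = path[0][0], path[-1][0]
    source = latency(path, origin, definition_point)   # origin -> definition point
    network = latency(path, definition_point, sink)    # definition point -> sink pin
    return source, network

path = [
    ("PLL/CLKOUT", 0.0),     # clock origin point
    ("clk_mux/Z", 120.0),
    ("blk/clk_port", 80.0),  # clock definition point
    ("cts_buf1/Z", 60.0),
    ("cts_buf2/Z", 55.0),
    ("ff1/CK", 25.0),        # sink (clock) pin of the register
]

src, net = insertion_delays(path, "blk/clk_port")
print(src, net, src + net)  # 200.0 140.0 340.0
```

The generic `latency` helper reflects the point that "clock latency" can refer to any two points. For example, `latency(path, "clk_mux/Z", "cts_buf1/Z")` is also a clock latency, just not an insertion delay.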

Consider a hierarchical design where multiple people work on multiple partitions or sub-modules. When a block is implemented on its own, the tool is oblivious to the "top" and to any logic outside the block. The block owner defines a clock at the port of the block (as shown in the figure) and carries out the physical design activities. The block owner therefore sees only the Network Insertion Delay and can only model the Source Insertion Delay for the block.
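Here is a minimal Python sketch of the block owner's view. It assumes a single clock port and ignores effects such as on-chip variation. The tool computes only the network insertion delay for each sink, and the source insertion delay is a single estimated number. In SDC this estimate is typically expressed with `set_clock_latency -source`, but here it is just a constant. The sink names, the delay values, and the capture-minus-launch skew convention are assumptions made for illustration.

```python
# All names and numbers below are hypothetical, for illustration only.

# Assumed source insertion delay (top-level origin -> block clock port),
# e.g. a budget handed down by the top-level team.
ESTIMATED_SOURCE_LATENCY_PS = 200.0

# Network insertion delay (block clock port -> register clock pin) per sink,
# i.e. what the block-level tool actually computes after clock tree synthesis.
network_delay_ps = {
    "ff1/CK": 140.0,
    "ff2/CK": 150.0,
    "ff3/CK": 135.0,
}

def total_latency(sink: str, source_latency_ps: float) -> float:
    """Modeled source insertion delay plus computed network insertion delay."""
    return source_latency_ps + network_delay_ps[sink]

def skew(launch: str, capture: str, source_latency_ps: float) -> float:
    """Capture clock arrival minus launch clock arrival (convention assumed here)."""
    return (total_latency(capture, source_latency_ps)
            - total_latency(launch, source_latency_ps))

print(total_latency("ff2/CK", ESTIMATED_SOURCE_LATENCY_PS))  # 350.0
# The modeled source latency is common to every sink behind the port, so it cancels:
print(skew("ff1/CK", "ff2/CK", ESTIMATED_SOURCE_LATENCY_PS))  # 10.0
print(skew("ff1/CK", "ff2/CK", 350.0))                        # 10.0
```

In this simplified model, every sink behind the same port shares the modeled source latency. An error in the estimate therefore shifts all arrival times equally and leaves skew inside the block unchanged. It does change the absolute latencies used when timing the block's interfaces against the top.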

Having discussed latency, we now turn our attention to another important clock parameter: skew.

We discussed the concept of skew and its implications on timing in the post: Clock Skew: Implication on Timing.

It would be prudent to go through that post before proceeding further.

We shall now look at the meaning of two terms: Global Skew and Local Skew.

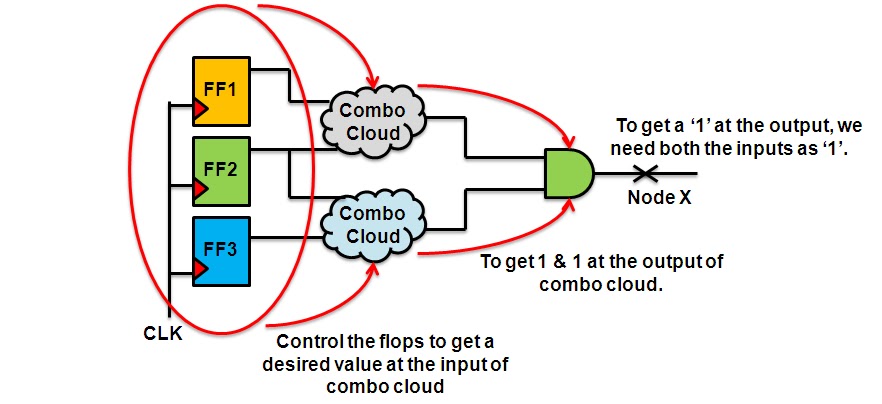

- Local Skew is the skew between any two related flops. By related, we mean that one flop lies in the fan-in or fan-out cone of the other, i.e., there is a timing path between them.

- Global Skew is the skew between any two non-related flops in the design. By non-related, we mean that neither flop lies in the fan-in or fan-out cone of the other, so there is no timing path between them and they are, in a way, mutually exclusive.
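The following Python sketch applies the two definitions above. The flop names, arrival times, and timing paths are hypothetical. "Related" is modeled as a direct register-to-register timing path in either direction. Reducing each category to its worst-case absolute difference is a choice made for this sketch, not part of the definitions.

```python
from itertools import combinations

# Hypothetical clock arrival times (ps) at each flop's clock pin.
arrival_ps = {"A": 340.0, "B": 355.0, "C": 310.0, "D": 372.0}

# Hypothetical register-to-register timing paths as (launch, capture) pairs.
timing_paths = {("A", "B"), ("B", "C")}

def is_related(f1: str, f2: str, paths: set[tuple[str, str]]) -> bool:
    """Related: one flop is in the fan-in or fan-out cone of the other,
    modeled here as a timing path between them in either direction."""
    return (f1, f2) in paths or (f2, f1) in paths

def worst_skews(arrival: dict[str, float],
                paths: set[tuple[str, str]]) -> tuple[float, float]:
    """Return (worst local skew, worst global skew) as absolute differences."""
    worst_local = worst_global = 0.0
    for f1, f2 in combinations(sorted(arrival), 2):
        s = abs(arrival[f1] - arrival[f2])
        if is_related(f1, f2, paths):
            worst_local = max(worst_local, s)    # related pair -> local skew
        else:
            worst_global = max(worst_global, s)  # non-related pair -> global skew
    return worst_local, worst_global

print(worst_skews(arrival_ps, timing_paths))  # (45.0, 62.0)
```

Here the related pairs A-B and B-C give local skews of 15 ps and 45 ps. The largest spread, 62 ps between C and D, falls between non-related flops and so counts as global skew.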